The “Quite OK Image Format”

and how I got sidetracked down a memory lain and several side project.

If you’re like me, you’ve spent way more time than you’d care to admit wading through the pros and cons of various image formats. You might’ve cut your teeth on BMP (thanks, Windows Paint) or gotten overly familiar with the woes of JPEG compression (hello, artifacts!). And let’s not even talk about those ultra-beast TIFF files, just reading their specs can be a bedtime story for insomniacs. But, before we get into QOI I would like to present to you FIF, another image format that I played around in mid 90’s of the last century.

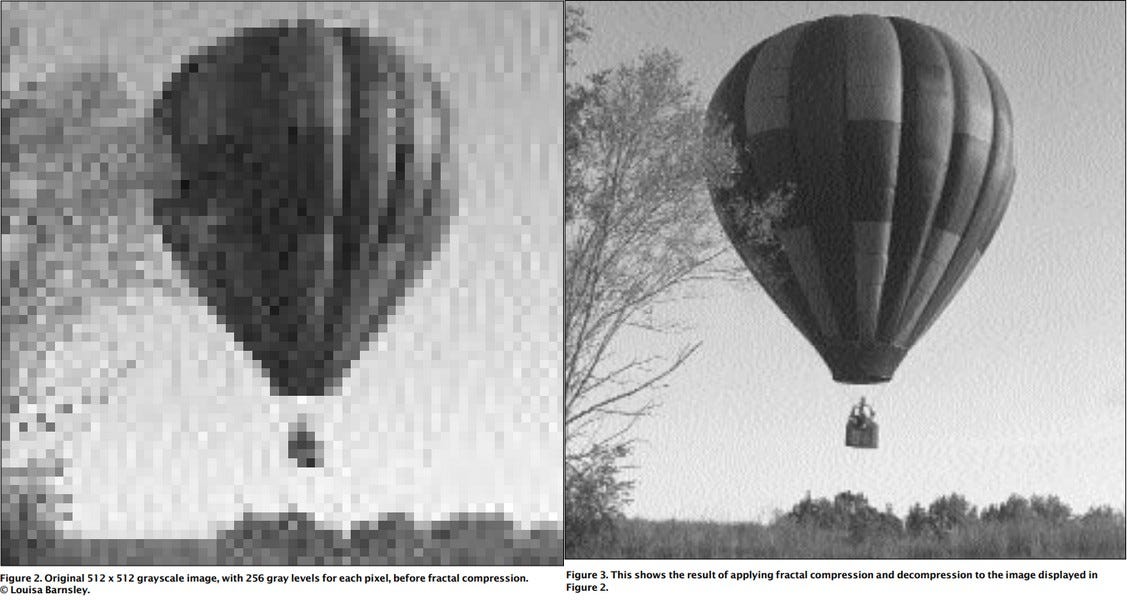

The .fif (Fractal Image Format) has a fascinating backstory. Born out of the 90s excitement for fractal compression and “infinite zoom.” However, real-world hurdles (technical complexity, slow encoding, proprietary licensing) kept it from catching on. Its file size was small, and while compression took some time decompression was fast-ish for that time.

It was created by Michael Fielding Barnsley (Iterated Systems Inc.) and due to lackluster adoption it was bought out and disappeared around the early 2000. I’ve been trying to find what happened to it but it’s a merry-go-round. From Interwoven, to mediabin, to ON1 there is just no information on the web about it. It’s like it disappeared down some black hole of the web. The last decent discussion on the original compression idea was on HackerNews in 2014. In the repo for this post, I will also include the original paper from Dr. Barnsley so it again does not get lost.

I tried to recreate it. Bold, I know, but kept coming against visual artifacts like blockiness, and unsatisfying speed and compression rate. In short, it took waaayyy too long, in order of minutes, to do the calculations and then it was larger than the original input. So, no joy there. At least I learned something. It is somewhat similar to my Image Mosaic work. One of the approaches to using fractal compression is to break an image into smaller and smaller parts and do various transforms on those parts looking for similarities. Compression comes in the form of reusing that part and just recording what transformation (size, rotation color, brightness) was done to it.

After cooling off a little and wallowing in my failure I thought about neural networks and if they could help. Did some research and stumbled upon SIREN, in a paper titled Implicit Neural Representations with Periodic Activation Functions. That’s a mouthful.

SIREN, or Sinusoidal Representation Network, is what happens when someone looks at a regular neural network and says, "You know what this needs? More trigonometry."

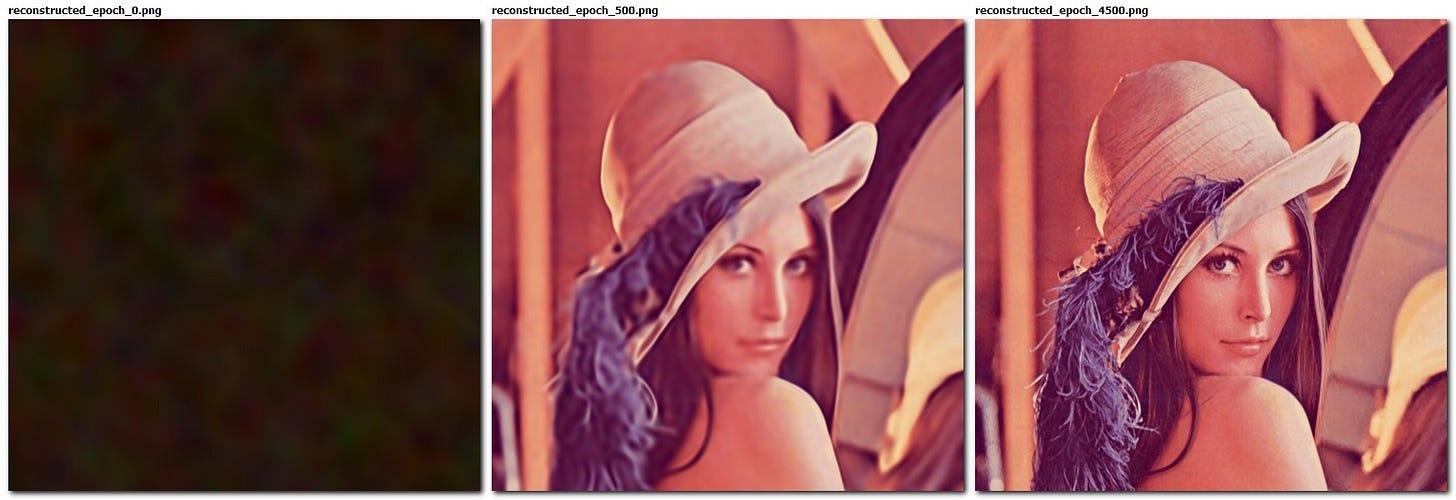

Alright, here’s the breakdown, let’s keep the fun rolling in. I took their research and tried to recreate it. Instead of storing an image as a grid of pixels, I trained a neural network to memorize the whole picture as a continuous function, so you can later ask it for any resolution you want.

Basically, this neural network is turning an image into math. Instead of memorizing pixels like a regular neural net, it learns a continuous function that maps (x, y) coordinates to RGB values. No pixelation, just pure, wavy sine-powered magic.

Here’s how it works:

A normal RGB pixel is represented as:

Each value is divided by 255:

Results in:

This process is applied to every pixel in the image, preserving the (H, W, 3) shape.

That input is transformed using a sine function instead of the usual activation functions like ReLU or Sigmoid. The reason? Sine functions are periodic and smooth, making them perfect for capturing fine details, edges, and complex variations in an image.

W and b are the weight matrix and bias, just like in any normal neural network.

ω is a scaling factor that controls the frequency of the sine function.

A higher ω means sharper details.

A lower ω produces a smoother output.

The sine function ensures that the network doesn't just memorize pixel values but instead learns a continuous function that represents the image.

Training took around 8 min on my, relatively old, computer and GPU for 5000 training Epochs. The input image is 512x512 and then reconstructed to 2048x2048 pixels. The resulting model file that can be used to reconstruct an image at any resolution is 781kb. That is a lot bigger than 65kb of the original but the ability to scale the image up is interesting.

I’m really happy with how this side project turned out. While it is not a compression algorithm I did learn a lot.

Ok, now, finally let’s get to QOI or “Quite OK Image Format”.

It’s rare that a brand-new image format rolls around and immediately sparks excitement across the developer community. Enter QOI: the “Quite OK Image” format that’s way more than okay. It’s just 300 lines of C, 300 lines. Let that sink in.

QOI was created by Dominic Szablewski in 2021. QOI prioritizes speed and simplicity over maximum compression. Like PNG it is lossless meaning that it preserves information about every pixel as is in the original, with no artifacts like JPEG.

Unlike PNG, which uses complex filtering and Deflate compression, QOI encodes images using a run-length encoding (RLE) approach combined with a small 64-slot index to store recently seen pixel values.

Dominic made an excellent post about how he came up with the idea and how it works in a great post on his site. It makes a really interesting and fun read, how from a simple idea something amazing could arise.

TL;DR (dry boring info, check his site for more):

The image is compressed in a single-pass encoding process that handles each pixel sequentially. As the encoder processes the image from top left to bottom right, it utilizes several strategies to efficiently compress the data:

Run-Length Encoding (RLE): When consecutive pixels are identical, QOI records this repetition using a run-length encoding method. This approach stores the repeated pixel value once, along with a count of how many times it repeats, effectively compressing uniform areas within the image.

Pixel Indexing: QOI maintains a 64-slot index of recently encountered pixel values. For each new pixel, the encoder computes a simple hash to determine an index position:

If the current pixel matches the value stored at this index, the encoder writes a reference to this index, reducing the need to store the full pixel data again.

Delta Encoding: For pixels that differ slightly from their predecessors, QOI encodes the difference in color channels rather than the absolute values. This method captures minor variations efficiently by storing the change (delta) from the previous pixel's color channels.

These approaches allow QOI to achieve fast, lossless compression with a minimalistic approach. And the numbers are impressive:

Total for images/photo_wikipedia (AVG)

decode ms encode ms decode mpps encode mpps size kb rate

libpng: 22.9 310.8 47.42 3.49 1799 56.6%

stbi: 26.7 195.6 40.63 5.55 2498 78.6%

qoi: 7.4 11.1 147.02 97.55 2102 66.2%

Grand total for images (AVG)

decode ms encode ms decode mpps encode mpps size kb rate

libpng: 7.0 83.8 66.56 5.54 398 24.2%

stbi: 7.0 60.5 66.63 7.67 561 34.2%

qoi: 2.1 2.9 226.03 161.99 463 28.2%The simplicity of QOI has led to its adoption across various programming languages and platforms. Implementations are available in all programming languages of note, and plugins have been developed for tools like GIMP, Paint.NET, and XNView MP, and support has been integrated into libraries such as SDL_Image. This widespread adoption underscores QOI's appeal as a fast and efficient image compression format.

But its widespread adaptation is only hampered by Chrome and other browsers not yet supporting it and that needs to happen for that format not to go the way of FIF and many others.