While digging around the current state of “publicly” available neural networks like GPT-Neo, GPT-3, DALL-E, Stable Diffusion, and others, it became clear that there’s a shift coming in the way we will use computers to do creative work. Even now, the amount that can be automated is amazing and slightly frightening, as not that long ago this level of “creativity” was not considered to be within the realm of possibility for computers.

Just a few years back, you had to manually trace out a person if you wanted to remove a background. Now AI will do a much better job. If you had part of a picture and wanted to fill in the rest, you needed the same level of artistry as the original painter to do a good job of it. Now you can go to DALL-E and ask it to fill in the missing pieces.

Or if you want to create prize-winning digital art just ask Stable Diffusion to make it for you.

Want to create new music, or write a summary of a book, or a whole book for that matter? AI has you covered. It’s not great at all of those things just yet, but in some cases it is better than you would think. In the not-so-distant past, to be a graphic designer or digital artist you had to have a predisposition for such work, a talent. Today, you can download NVIDIA Canvas and become the next Bob Ross. You will never truly become Bob, but at least you can feel a little closer to his brilliance when you too instruct an AI to paint that happy little cloud.

While true art will still be valued, the line between AI-created and human-created art has all but disappeared in the last year.

Now, what can we do to prepare for the future workforce? What work will need to be done that neural networks can’t do just yet?

In the future, we will see much more digitally generated content as digital content generation enters the mainstream. It will be a tough time to be a digital artist, because the quality of your output will depend not on your own drawing talent but on how well you know the neural network you are prompting to draw what you need. I truly believe that prompt engineering is the future of mass digital content creation. As mentioned before, true digital art will always be valued, but on an individual basis, not when you need to create hundreds of different textures for a 3D model or backdrops.

As soon as I started thinking about prompt engineering as a future job, a scene from Westworld Season 4 Episode 1 came to mind, where a Storyteller is creating a narrative using voice prompts. She builds the set, the characters, and their environment and emotional states by entering prompts by voice. And what’s truly bonkers is that I don’t think that’s beyond our current possibilities.

What neural networks are today is a huge, almost endless set of possibilities. They have the ability to create almost anything, but it’s all locked behind creative intent, which such networks don’t have. They can paint as well as Van Gogh but don’t unless prompted to do so and told what to paint. They hold the whole sum of interconnected semantic knowledge of human creativity but are not creative by themselves, at least not in any coherent way.

I’ve been playing with Stable Diffusion, which is just amazing, but there was an issue: I didn’t know what to ask it for or how to ask. So I went on a little journey to see what is out there in terms of user guides for diffusion models. And there’s a lot. There are whole books and Google Docs full of prompt engineering tips and tricks, as if this technology had been in the world for decades, not months or a year at best. One of the better short overviews is A Taxonomy of Prompt Modifiers for Text-To-Image Generation by Jonas Oppenlaender from the University of Jyväskylä, Finland.

You can start playing with Stable Diffusion online, but there are credits and other free-until-you-need-to-pay systems in place to help maintain the computing infrastructure. Or you could install it locally and play with it indefinitely.

While books and docs are all good, for a more visual learning experience there are dedicated websites that help you build your prompt.

The most useful is AI Art. You can filter by styles, lens effects, effects, artists, materials, and a bunch of other inputs that diffusion models will accept. AI Art will not build the prompt for you, but it will give you an idea of what to expect from a given item in the prompt.
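To make the idea of modifier categories concrete, here is a minimal Python sketch that assembles a prompt from a subject plus a few of the categories mentioned above. The `PromptSpec` class and `build_prompt` function are hypothetical names made up for illustration. They are not part of AI Art or Stable Diffusion, and the comma-separated layout is only an assumption based on how the shared prompts in this post are written.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """A subject plus optional modifier groups (all names here are illustrative)."""
    subject: str
    styles: list[str] = field(default_factory=list)
    lens_effects: list[str] = field(default_factory=list)
    artists: list[str] = field(default_factory=list)
    materials: list[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Join the subject and every modifier into one comma-separated prompt."""
    parts = [spec.subject]
    parts += spec.styles + spec.lens_effects + spec.materials
    # Assumption: artist references are written as "by <name>".
    parts += [f"by {name}" for name in spec.artists]

    # Drop empty entries and case-insensitive duplicates, keeping order.
    seen = set()
    unique = []
    for part in parts:
        cleaned = part.strip()
        key = cleaned.lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return ", ".join(unique)


example = build_prompt(
    PromptSpec(
        subject="spaceship entering a nebula",
        styles=["digital painting", "concept art"],
        lens_effects=["sharp focus"],
        artists=["piranesi"],
    )
)
print(example)
```

Keeping each category in its own list makes it easy to swap one modifier at a time and see what it contributes to the image.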

Next are Lexica.art and Krea. Both host already-made images and provide the prompts that were used to generate them. So here it’s a “copy with pride” attitude to learning until you get to grips with how things work. It’s not a bad way to go exploring, but it’s less structured for long-term success as a prompt engineer.

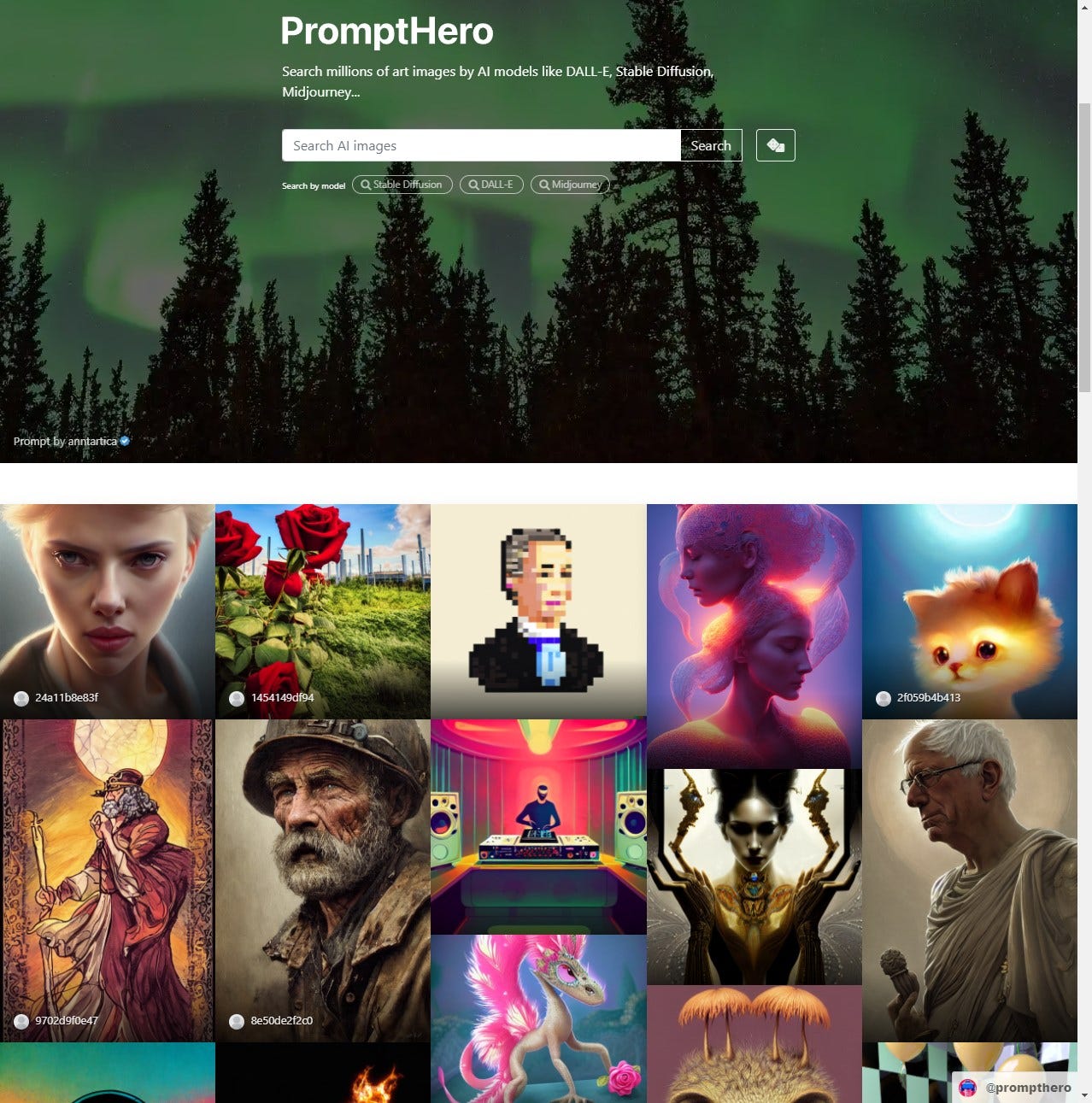

Now, PromptHero is a completely different beast and is much more powerful as a search tool for experimentation. Besides standard search, you can browse by topic or, more importantly, by the type of neural network you are using.

That’s another thing to remember: not all diffusion models are built the same way or respond to the same prompts. Most prompts are interchangeable, but there can be differences.

prompt:

portrait photo of a asia old warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes, 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta

Made in: Midjourney v3
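One visible difference in the prompt above is the trailing `--` parameters, which belong to Midjourney. If you want to try the same description in another tool, a small helper can separate the descriptive text from those parameters. This is only a sketch: `split_prompt` is a hypothetical helper, and the rule that a parameter is `--` followed by a name and an optional value is my assumption from this one sample, not Midjourney’s actual parser.

```python
import re

# Assumption: a parameter is "--" plus a name starting with a letter, and its
# optional value runs until the next "--name" or the end of the string.
FLAG_PATTERN = re.compile(r"--([A-Za-z]\w*)(.*?)(?=--[A-Za-z]|$)", re.DOTALL)


def split_prompt(raw: str) -> tuple[str, dict[str, str]]:
    """Return (descriptive text, parameters) for a prompt with "--" suffixes."""
    first_flag = FLAG_PATTERN.search(raw)
    if first_flag is None:
        return raw.strip(), {}

    text = raw[: first_flag.start()].strip()
    flags = {}
    # A repeated parameter (like "--beta" here) simply keeps its last value.
    for name, value in FLAG_PATTERN.findall(raw[first_flag.start():]):
        flags[name] = value.strip()
    return text, flags


raw_prompt = (
    "portrait photo of a asia old warrior chief, tribal panther make up, "
    "blue on red, side profile, looking away, serious eyes, "
    "50mm portrait photography, hard rim lighting photography"
    "--beta --ar 2:3 --beta --upbeta"
)
text, flags = split_prompt(raw_prompt)
print(text)
print(flags)
```

The descriptive part can then be pasted into a different model, while the parameters stay behind as a record of how the original image was made.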

PromptHero offers an Academy and a more basic free prompt guide to get you started. The Academy describes itself like this:

An in-depth course perfect for curious artists that want to get from zero to expert in AI image generation. No previous experience required – we'll walk you through from scratch, step by step. We'll cover everything from the basics to advanced prompt engineering techniques. By the end of the course you'll be generating your own realistic, unique, astonishing images – and you'll know why they work and how to tweak the prompt to make it do exactly what you want.

There are more online sites that collect prompts and I believe their number will only increase.

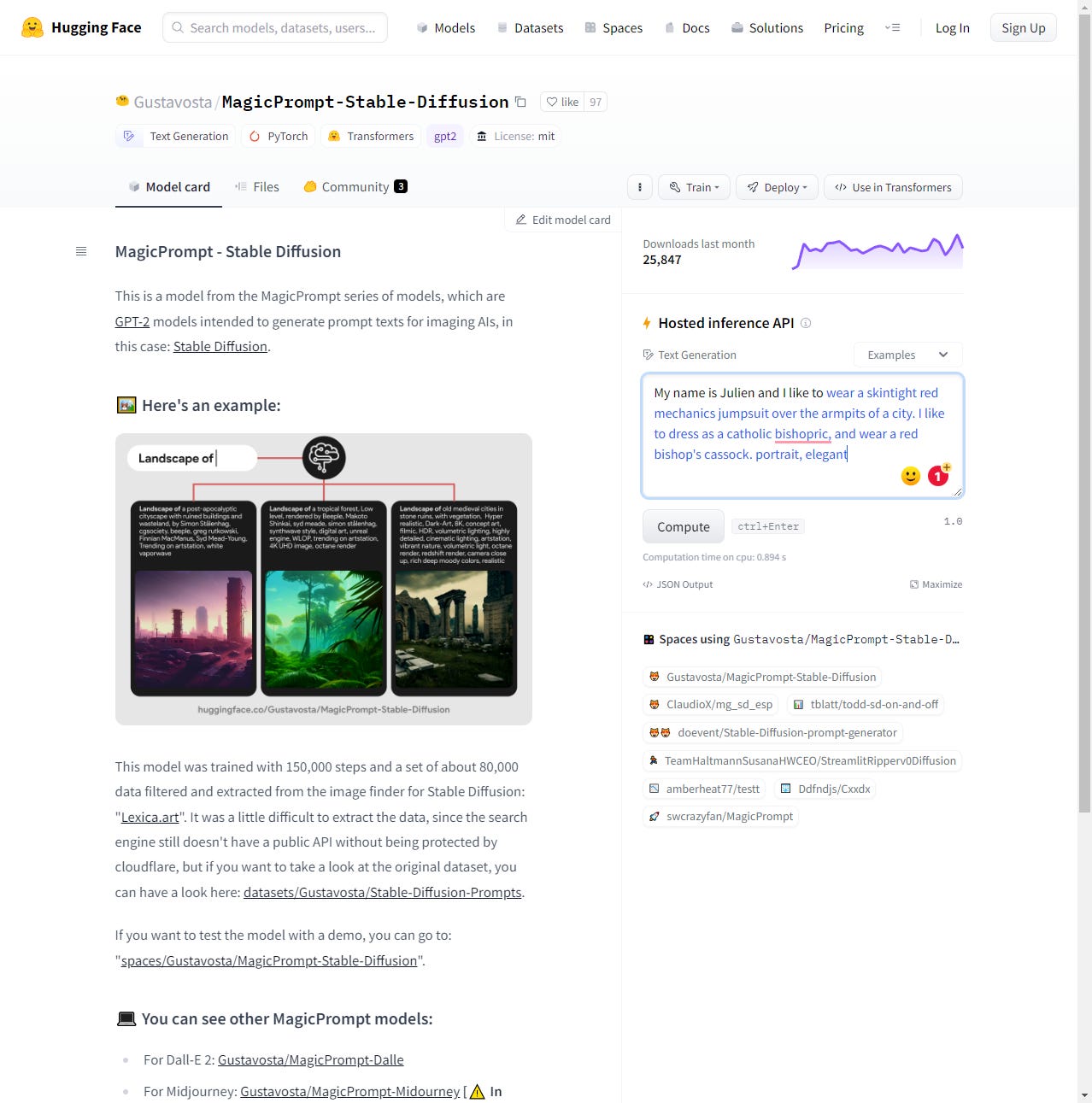

Creating prompts is difficult enough that people are already using AI to help with it, like MagicPrompt for Stable Diffusion, which uses a GPT-2 model trained on 80,000 entries extracted from Lexica.art.

With minimal input, it creates an extended prompt for use with Stable Diffusion. For example, given the initial input “spaceship entering a nebula”, hitting compute returns “spaceship entering a nebula below planet earth. by piranesi, elegant, highly detailed, centered, digital painting, artstation, concept art, smooth, sharp focus, illustration, artgerm, tomasz alen kopera”.

As you might notice, it is not perfect, as there is no nebula below planet Earth, but let’s see what our local Stable Diffusion installation makes of the prompt.

It’s impressive but not what we wanted. We wanted a spaceship entering a nebula. So by slightly modifying the generated prompt to remove the Earth reference, we get much closer to our original idea.
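If you end up doing this kind of cleanup often, it is easy to script. The sketch below removes one unwanted phrase from the MagicPrompt output quoted earlier. `remove_phrase` is a hypothetical helper, and choosing “below planet earth” as the exact text to cut is my assumption about what “the earth reference” covers.

```python
import re


def remove_phrase(prompt: str, phrase: str) -> str:
    """Delete every occurrence of `phrase` (case-insensitive) from `prompt`."""
    # Also swallow the whitespace in front of the phrase so no gap is left.
    pattern = re.compile(r"\s*" + re.escape(phrase), flags=re.IGNORECASE)
    cleaned = pattern.sub("", prompt)
    # Collapse any leftover runs of whitespace into single spaces.
    return re.sub(r"\s{2,}", " ", cleaned).strip()


generated = (
    "spaceship entering a nebula below planet earth. by piranesi, elegant, "
    "highly detailed, centered, digital painting, artstation, concept art, "
    "smooth, sharp focus, illustration, artgerm, tomasz alen kopera"
)
edited = remove_phrase(generated, "below planet earth")
print(edited)
```

Everything else in the prompt, including the style and artist modifiers, is left exactly as MagicPrompt produced it.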

If text-to-image generation seems unreal to you, this next thing is even more surreal to me. There is img2prompt, where you put in an image and it tells you what prompt will “most probably” create something like it.

The future for digital creatives looks really challenging, but as T Bone Burnett says, we are humans from Earth. We will adapt, and in the end, I’ve been wrong before.

I hope so, as I like our species.