Nobody ever thought that computers would encroach on, let alone overtake, humans in creating art, but here we are. The only thing AI can’t beat us at is handcrafting objects that are praised for their flaws and random quirks rather than their precision. And that makes me kinda sad for all those art majors and kinda happy for all the trade craft kids.

Not to say that genuinely new and imaginative art will not be appreciated, but even now the difference is so blurred that AI is winning art competitions and prompting a debate over what art is. I can’t remember who said it, but I do believe that in the next 5 to 10 years we will be seeing a new kind of “digital artist” whose job description will be “prompt stylist” or something like that. The same can be said for almost all fields where neural nets can be found, especially those based on a transformer model. They hold large swaths of humanity’s work and knowledge, but to get anything truly useful out of them the user needs to tease it out in clever and imaginative ways.

What’s going on?

It all started on 28 September 2022, when access to DALL-E 2 was opened to anyone and the waitlist requirement was removed. The internet became flooded with high-quality “art”. For more information, check out the DALL-E 2 and crAIyon post.

For all its power, DALL-E 2 is rather hampered by the restrictions imposed on it during training. Not to say they weren’t needed, as the Internet is a hostile environment for sane people, but we all need some small degree of insanity to function properly. Also, it’s so huge that it can only be accessed through the OpenAI website.

On August 22, 2022, an open-source alternative to DALL-E 2 called Stable Diffusion was released. It was developed by the CompVis group at Ludwig Maximilian University of Munich together with Stability AI and Runway, and by some estimates its development took about 5 million Euros of computer processing time. In some ways it is the more impressive of the two. For one, it’s smaller and can be run locally on high-end machines with a graphics card with at least 10 GB of video memory. And if you reduce the precision from 32-bit to 16-bit, it can be run on graphics cards with much less (4 GB) memory. One restriction is that it needs the cores of an Nvidia RTX graphics card.
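To get a feel for why halving the precision helps, here is a rough back-of-the-envelope sketch in Python. The parameter counts are approximate figures I am assuming for the first Stable Diffusion release, and the function name is made up for illustration. It only counts the stored weights; the intermediate data produced while generating an image takes additional memory on top of that.

```python
# Rough estimate of how much memory the model weights alone occupy.
# ASSUMPTION: approximate parameter counts for Stable Diffusion v1;
# treat them as ballpark figures, not official numbers.
APPROX_PARAMS = {
    "unet": 860_000_000,          # the denoising network
    "text_encoder": 123_000_000,  # turns the prompt into numbers
    "vae": 84_000_000,            # converts between latents and pixels
}


def weights_gib(num_params: int, bits_per_param: int) -> float:
    """Size in GiB of num_params weights stored at the given precision."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1024**3


total_params = sum(APPROX_PARAMS.values())
fp32 = weights_gib(total_params, 32)
fp16 = weights_gib(total_params, 16)

print(f"32-bit weights: {fp32:.1f} GiB")
print(f"16-bit weights: {fp16:.1f} GiB")
```

Under these assumptions the weights shrink from roughly 4 GiB to roughly 2 GiB, and the working data shrinks along with them, which is what makes the smaller cards viable.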

The Hard way and the Online way

The installation and use of Stable Diffusion are not that difficult, but for anyone not confident with the command line and installing multiple libraries, the installation could prove somewhat strenuous. Also, there’s this:

Unlike models like DALL-E, Stable Diffusion makes its source code available, along with pre-trained weights.

…

Since visual styles and compositions are not subject to copyright, the general consensus is that users of Stable Diffusion who generate images of artworks from randomly learned data do not infringe upon the copyright of visually similar works, however individuals depicted in generated images may still be protected by personality rights if their likeness is used, and intellectual property such as recognisable brand logos still remain protected by copyright.

The Stable Diffusion team offers a test platform similar to the one for DALL-E 2, called DreamStudio. It comes with some free credits, and if you want to use it more you have to pay. It’s a good first contact with the technology.

And now …

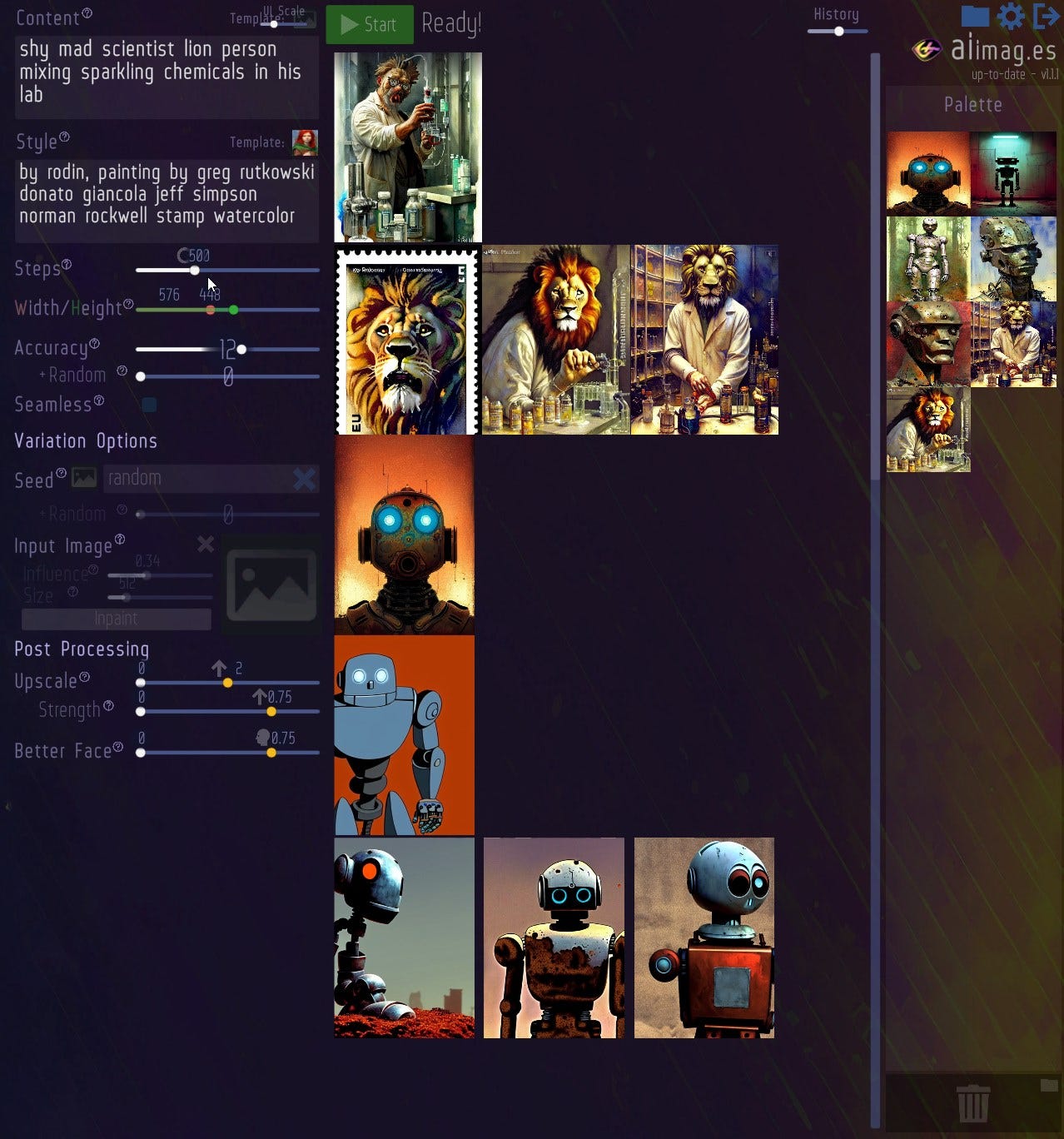

The whole thing got even more interesting on Sep 6, 2022, when a version of Stable Diffusion implemented within a Unity game engine project was published on GitHub. The new version, 1.1.0, was released two weeks ago. AI Image Generator GUI is, in my opinion, a game-changer, as it’s a local install of a really impressive AI system. With the minimal requirement of an RTX graphics card with at least 4 GB of video memory, the allure is even bigger.

The application was developed by Daniel Hook, aka Sunija, from Munich, Germany, while working on games for physical therapy. So… Thank you, Daniel!

Installation

To install the AI Image Generator GUI, go to sunija.itch.io/aiimages, download the 8 GB file, unpack it wherever you want, and run aiimages.exe. The images generated are amazing, and each one takes about 15 seconds on my paltry RTX 2060.

If you are unsure about what to ask or how to ask it, take a look at the video on sunija.itch.io, or browse some generated images with their accompanying prompts at lexica.art to get you started. Copy the text prompt into the AI generator and give it a whirl.